Hello.

Today I will explain how to install MediaPipe, a machine learning solution built by Google, on macOS, and how to apply its Face Detection solution to Android.

1. Installing on macOS

Date written: 2020. 08. 11 (Tue)

Reference link: https://google.github.io/mediapipe/getting_started/install.html#installing-on-macos

1.1. Prework:

1.2. Checkout MediaPipe repository.

$ git clone https://github.com/google/mediapipe.git

1.3. Install Bazel.

Option 1. Use package manager tool to install Bazel

$ brew install bazel

Option 2. Follow the official Bazel documentation to install Bazel 2.0 or higher.
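Whichever option you choose, it can help to confirm that the installed Bazel meets the 2.0 minimum before moving on. The snippet below is only a minimal sketch and not part of the official instructions: the helper name bazel_major_at_least is made up for this post, and it assumes that bazel --version prints a line of the form "bazel 3.4.1".

```shell
# Hypothetical helper: succeeds when the major part of version $1
# (for example "3.4.1") is greater than or equal to $2.
bazel_major_at_least() {
  major="${1%%.*}"
  [ "$major" -ge "$2" ]
}

# Only query Bazel when it is actually on the PATH.
if command -v bazel >/dev/null 2>&1; then
  # Assumption: the output looks like "bazel 3.4.1".
  installed="$(bazel --version | awk '{print $2}')"
  if bazel_major_at_least "$installed" 2; then
    echo "Bazel $installed is new enough"
  else
    echo "Bazel $installed is older than 2.0; please upgrade"
  fi
else
  echo "bazel not found; install it with one of the options above"
fi
```

Only the major version is compared, which is enough here because the requirement is simply "2.0 or higher".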

1.4. Install OpenCV and FFmpeg.

Option 1. Use the Homebrew package manager to install the pre-compiled OpenCV 3.4.5 libraries. FFmpeg will be installed via OpenCV.

$ brew install opencv@3

Option 2. Use MacPorts package manager tool to install the OpenCV libraries.

$ port install opencv

Note: when using MacPorts, please edit the WORKSPACE, opencv_macos.BUILD, and ffmpeg_macos.BUILD files like the following:

new_local_repository(

1.5. Make sure that Python 3 and the Python “six” library are installed.

$ brew install python
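To verify this step, a small check like the following can be used. It is a sketch under assumptions: the function name check_python_six is hypothetical, it assumes the interpreter is available as python3, and the pip3 hint in the message is just one common way to install the library.

```shell
# Hypothetical helper: reports whether python3 exists and can import "six".
check_python_six() {
  if ! command -v python3 >/dev/null 2>&1; then
    echo "python3 not found"
    return 1
  fi
  if python3 -c 'import six' >/dev/null 2>&1; then
    echo "six is installed"
  else
    # Assumption: pip3 is the installer you use for this interpreter.
    echo "six is missing; try: pip3 install --user six"
    return 1
  fi
}

# Print the result without aborting the shell on failure.
check_python_six || true
```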

1.6. Run the Hello World desktop example.

$ export GLOG_logtostderr=1

If you run into a build error, see Troubleshooting for solutions to several common build issues.

2. Building MediaPipe Examples

Date written: 2020. 08. 11 (Tue)

Reference link: https://google.github.io/mediapipe/getting_started/building_examples.html#android

2.1. Prerequisites

- Java Runtime.

- Android SDK release 28.0.3 and above

- Android NDK r18b and above
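As far as I know, the MediaPipe build locates the SDK and NDK through the ANDROID_HOME and ANDROID_NDK_HOME environment variables. The following sketch checks that both are set and point to existing directories; the function name check_android_env is hypothetical, and it does not verify the SDK or NDK version numbers listed above.

```shell
# Hypothetical helper: verifies that the SDK/NDK variables are set and
# that each one points to an existing directory.
check_android_env() {
  status=0
  for name in ANDROID_HOME ANDROID_NDK_HOME; do
    # Read the variable whose name is stored in $name (empty if unset).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name is not set"
      status=1
    elif [ ! -d "$value" ]; then
      echo "missing: $name=$value is not a directory"
      status=1
    else
      echo "ok: $name=$value"
    fi
  done
  return "$status"
}

# Print the result without aborting the shell on failure.
check_android_env || true
```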

2.2. Build with Bazel in Android Studio

To incorporate MediaPipe into an existing Android Studio project, see these instructions that use Android Archive (AAR) and Gradle.

3. MediaPipe Android Archive

The MediaPipe Android Archive (AAR) library is a convenient way to use MediaPipe with Android Studio and Gradle.

3.1. Steps to build a MediaPipe AAR

1. Create a mediapipe_aar() target.

To build MediaPipe Face Detection as an AAR,

create a BUILD file in /path/to/your/mediapipe/mediapipe/examples/android/src/java/com/google/mediapipe/apps/aar_face_detection and enter the code below:

load("//mediapipe/java/com/google/mediapipe:mediapipe_aar.bzl", "mediapipe_aar")

2. Run the Bazel build command to generate the AAR

bazel build -c opt --host_crosstool_top=@bazel_tools//tools/cpp:toolchain --fat_apk_cpu=arm64-v8a,armeabi-v7a --linkopt="-s" \
    //mediapipe/examples/android/src/java/com/google/mediapipe/apps/aar_face_detection:mp_face_detection_aar

✅ --linkopt="-s"

This option reduces the size of the AAR file produced by the Bazel build. The -s linker flag strips symbol information from the native libraries, which can save more than 90 MB.

3. Location of the mp_face_detection_aar.aar file

/path/to/your/mediapipe/bazel-bin/mediapipe/examples/android/src/java/com/google/mediapipe/apps/aar_face_detection

3.2. Steps to use a MediaPipe AAR in Android Studio with Gradle

1. Start Android Studio and go to your project.

2. Copy the AAR into app/libs.

3. Create app/src/main/assets and copy the assets (graph, model, etc.) into it.

# Command to generate the graph (see the name of the mediapipe_binary_graph target in the /mediapipe/graphs/tracking/BUILD file)

bazel build -c opt mediapipe/graphs/custom_tracking:mobile_gpu_binary_graph

# Graph output location: /path/to/your/mediapipe/bazel-bin/mediapipe/graphs/custom_tracking

e.g. /Users/yeon/workspace/mediapipe/bazel-bin/mediapipe/graphs/custom_tracking

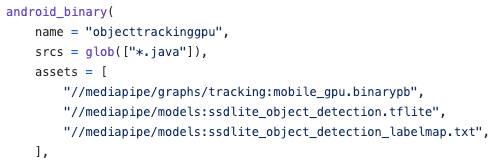

# Models required by the tracking solution (see the assets of the android_binary target in the /mediapipe/examples/android/src/java/com/google/mediapipe/apps/objecttrackinggpu/BUILD file and copy those models into the assets folder of your Android project)

# Command to generate the graph (see the name of the mediapipe_binary_graph target in the /mediapipe/graphs/face_detection/BUILD file)

bazel build -c opt mediapipe/graphs/face_detection:mobile_gpu_binary_graph

# Graph output location: /path/to/your/mediapipe/bazel-bin/mediapipe/graphs/face_detection

# Models required by the face detection solution (see the assets of the android_binary target in the /mediapipe/examples/android/src/java/com/google/mediapipe/apps/face_detection/BUILD file and copy those models into the assets folder of your Android project)
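Steps 2 and 3 both come down to copying build outputs into the Android project, so they can be scripted. The helper below is a minimal sketch: the name copy_matching is hypothetical, MEDIAPIPE_ROOT and APP_DIR in the usage comments are placeholders for your own paths, and the *.binarypb pattern assumes the binary graph is emitted with that extension.

```shell
# Hypothetical helper: copies every regular file in directory $1 whose name
# matches glob $2 into directory $3, creating the destination if needed.
# Returns non-zero when nothing matched.
copy_matching() {
  src_dir="$1"
  pattern="$2"
  dest_dir="$3"
  mkdir -p "$dest_dir" || return 1
  status=1
  # $pattern is left unquoted on purpose so that the glob is expanded.
  for file in "$src_dir"/$pattern; do
    [ -f "$file" ] || continue
    cp "$file" "$dest_dir"/ || return 1
    echo "copied $(basename "$file")"
    status=0
  done
  return "$status"
}

# Usage (placeholders, adjust to your machine):
# copy_matching "$MEDIAPIPE_ROOT/bazel-bin/mediapipe/examples/android/src/java/com/google/mediapipe/apps/aar_face_detection" '*.aar' "$APP_DIR/libs"
# copy_matching "$MEDIAPIPE_ROOT/bazel-bin/mediapipe/graphs/face_detection" '*.binarypb' "$APP_DIR/src/main/assets"
```

The model files still have to be picked by hand from the assets listed in the example's BUILD file, since their names depend on the solution.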

4. Put the OpenCV libraries into “/path/to/your/app/src/main/jniLibs/”.

OpenCV install link : here
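Android expects native libraries under jniLibs in one folder per ABI, so after copying it is worth checking that both ABIs used in the AAR build command (arm64-v8a and armeabi-v7a) are present. The following is a sketch only: the function name check_jni_libs is hypothetical, and it merely checks that each ABI folder contains at least one .so file, not that it is the right OpenCV library.

```shell
# Hypothetical helper: $1 is the app module directory. Checks that
# $1/src/main/jniLibs has one folder per ABI with at least one .so file.
check_jni_libs() {
  jni_dir="$1/src/main/jniLibs"
  status=0
  # ABIs taken from the --fat_apk_cpu flag of the AAR build command.
  for abi in arm64-v8a armeabi-v7a; do
    if ls "$jni_dir/$abi"/*.so >/dev/null 2>&1; then
      echo "ok: $abi"
    else
      echo "missing: no .so files in $jni_dir/$abi"
      status=1
    fi
  done
  return "$status"
}

# Usage (placeholder path): check_jni_libs /path/to/your/app
```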

5. Modify app/build.gradle to add MediaPipe dependencies and MediaPipe AAR.

dependencies {